Apple races to temper outcry over child-pornography tracking system

Apple Inc. is racing to contain a controversy after an attempt to combat child pornography sparked fears that customers will lose privacy in a place that’s become sacrosanct: their devices.

The tech giant is coaching employees on how to handle questions from concerned consumers, and it’s enlisting an independent auditor to oversee the new measures, which attempt to root out so-called CSAM, or child sexual abuse material. Apple also clarified Friday that the system would flag only users who had about 30 or more potentially illicit pictures.

The uproar began this month when the company announced a trio of new features: support in the Siri digital assistant for reporting child abuse and accessing resources related to fighting CSAM; a feature in Messages that will scan devices operated by children for incoming or outgoing explicit images; and a new feature for iCloud Photos that will analyze a user’s library for explicit images of children. If a user is found to have such pictures in his or her library, Apple will be alerted, conduct a human review to verify the contents, and then report the user to law enforcement.

Privacy advocates such as the Electronic Frontier Foundation warned that the technology could be used to track things other than child pornography, opening the door to “broader abuses.” And they weren’t assuaged by Apple’s plan to bring in an auditor and fine-tune the system, saying the approach itself can’t help but undermine the encryption that protects users’ privacy.

“Any system that allows surveillance fundamentally weakens the promises of encryption,” Erica Portnoy, a senior staff technologist at the foundation, said Friday. “No amount of third-party auditability will prevent an authoritarian government from requiring their own database to be added to the system.”

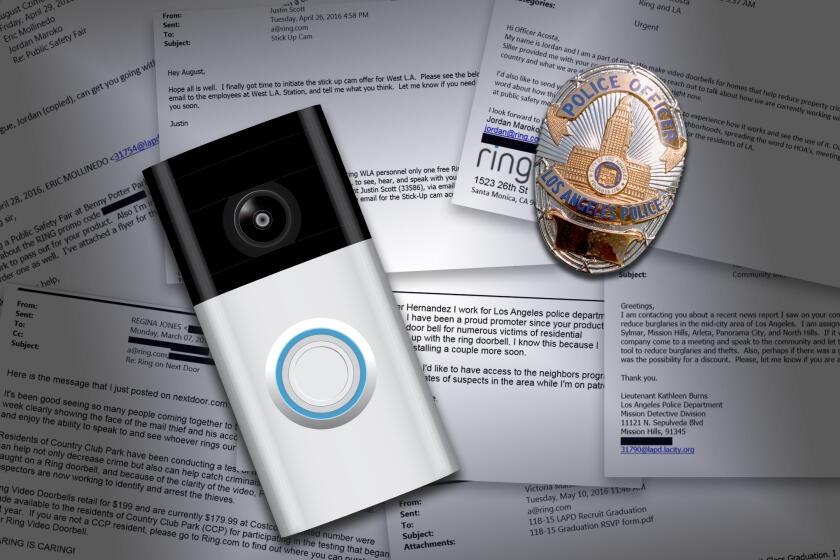

Ring provided at least 100 LAPD officers with free devices or discounts and encouraged them to endorse and recommend its doorbell and security cameras to police and members of the public.

The outcry highlights a growing challenge for Apple: preventing its platforms from being used for criminal or abusive activities while also upholding privacy — a key tenet of its marketing message.

Apple isn’t the first company to add CSAM detection to a photo service. Facebook Inc. has long had algorithms to detect such images uploaded to its social networks, while Google’s YouTube analyzes videos on its service for explicit or abusive content involving children. And Adobe Inc. has similar protections for its online services.

Google and Microsoft Corp. have also offered organizations tools for years to help them detect CSAM on their platforms. Such measures aren’t entirely new for Apple: The iPhone maker has had CSAM detection built into its iCloud email service since 2019.

But after years of Apple using privacy as an advantage over peers, it’s under extra pressure to get its message to customers right. In a memo to employees this week, the company warned retail and sales staff that they may receive queries about the new system. Apple asked its staff to review a frequently asked questions document about the new safeguards.

The iCloud feature assigns what is known as a hash key to each of the user’s images and compares the keys with ones assigned to images within a database of explicit material.

Some users have been concerned that they may be implicated for simply storing images of, say, their baby in a bathtub. But parents’ personal images of their children are unlikely to be in a database of known child pornography, which Apple is cross-referencing as part of its system.
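To illustrate why a parent's own photos are unlikely to match, here is a minimal sketch of hash-based matching in Python. It is not Apple's implementation: the function names are hypothetical, byte strings stand in for image files, and it uses exact SHA-256 hashing as a simplifying assumption. A production system would more plausibly use a perceptual hash that tolerates resizing or re-compression.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    """Fingerprint an image's bytes (exact-match SHA-256, a simplifying stand-in)."""
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(library: list[bytes], known_hashes: set[str]) -> list[int]:
    """Return the positions of library images whose hash appears in the known-image database."""
    return [i for i, img in enumerate(library) if image_hash(img) in known_hashes]


# Hypothetical data: the database holds hashes of previously identified images only.
known_hashes = {image_hash(b"known-image-A"), image_hash(b"known-image-B")}

# A user's library: a new family photo has no entry in the database, so it cannot match.
library = [b"baby-in-bathtub", b"known-image-B", b"vacation-photo"]

print(find_matches(library, known_hashes))  # [1]
```

Only the copy of an image already in the database is flagged; a new photo, however similar in subject, produces a different hash.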

Setting a threshold of about 30 images is another move that could ease privacy fears, though the company said that the number could change over time. Apple initially declined to share how many potentially illicit images need to be in a user’s library before the company is alerted.
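The threshold rule can be sketched the same way. This minimal Python example models only the counting rule described above, not how Apple actually enforces it; the `Account` type, `accounts_for_review` function, and default value of 30 are hypothetical, and the threshold is a parameter because the company said the number could change.

```python
from dataclasses import dataclass

# Hypothetical default: Apple said "about 30" and that the number could change over time.
DEFAULT_THRESHOLD = 30


@dataclass
class Account:
    user_id: str
    matched_images: int  # count of library images whose hash matched the database


def accounts_for_review(accounts: list[Account], threshold: int = DEFAULT_THRESHOLD) -> list[str]:
    """Return IDs of accounts whose match count meets or exceeds the threshold."""
    return [a.user_id for a in accounts if a.matched_images >= threshold]


accounts = [Account("alice", 0), Account("bob", 2), Account("carol", 31)]
print(accounts_for_review(accounts))  # ['carol']
```

A handful of matches, such as the two in the hypothetical account "bob", would not be escalated for human review under this rule.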

Apple also addressed concerns about governments spying on users or tracking photos that aren’t child pornography. It said its database would be made up of images sourced from multiple child-safety organizations, not just the National Center for Missing & Exploited Children, as was initially announced. The company also plans to use data from groups in regions under different governments and said the independent auditor will verify the contents of its database.

Apple representatives, however, wouldn’t disclose the operators of the additional databases or who the independent auditor will be. The company also declined to say whether the components of the system announced Friday were a response to criticism.

In a briefing, the Cupertino, Calif.-based company said that it already has a team for reviewing iCloud email for CSAM images, but that it will expand that staff to handle the new features. The company also published a document detailing some of its privacy upgrades to the system.

Apple has already said it would refuse any requests from governments to use its technology as a means to spy on customers. The system is available only in the U.S. and works only if a user has the iCloud Photos service enabled.

A corresponding feature in the Messages app has also sparked criticism from privacy advocates. That feature, which can notify parents if their child sends or receives an explicit image, uses artificial intelligence and is separate from the iCloud photo feature. Apple acknowledged that announcing the two features at the same time has fueled confusion because both technologies analyze images.

The move has raised other questions about how Apple handles users’ content. Despite using end-to-end encryption for messages in transit within its iMessage texting service and several parts of its iCloud storage system, Apple doesn’t allow users to encrypt their iCloud backups. That means that Apple or a bad actor could potentially access a user’s backup and review the material. The company has declined to say whether it plans to add encryption to iCloud backups.

Apple’s main message to customers and advocates is that it isn’t creating a slippery slope by combating CSAM. But Portnoy of the Electronic Frontier Foundation is unconvinced.

“Once you’ve built in surveillance, you can’t call that privacy-preserving,” she said.