Column: AI investors say they’ll go broke if they have to pay for copyrighted works. Don’t believe it

Followers of high finance are familiar with the old principle of privatizing profits and socializing losses — that is, treating the former as the rightful property of investors and shareholders while sticking the public with the latter. It’s the principle that gave us taxpayer bailouts of big banks and the auto companies during the last recession.

Investors in artificial intelligence are taking the idea one step further in fighting against lawsuits filed by artists and writers asserting that the AI development process involves copyright infringement on a massive scale.

The AI folks say that nothing they’re doing infringes copyright. But they also argue that their technology itself is so important to the future of the human race that no obstacles as trivial as copyright law should stand in their way.

They say that if they’re forced to pay fees to copyright holders simply for using their creative works to “train” AI chatbots and other such programs, most AI firms might be forced out of business.

Frank Landymore of Futurism.com had perhaps the most irreducibly succinct reaction to this assertion: “Boohoo.”

Get the latest from Michael Hiltzik

Commentary on economics and more from a Pulitzer Prize winner.

You may occasionally receive promotional content from the Los Angeles Times.

The truth is that the investment community sees AI as a potential goldmine. One study placed the infusion of investment cash into the market in the last quarter alone at nearly $18 billion — rising higher even as investments in other startup categories have been shrinking.

Measured against that torrent, the AI industry’s claim that having to comply with the ownership rights of writers and artists would wreck their financial models falls a little flat.

Before we go further, a brief primer on the copyright issue.

AI chatbot developers “train” their systems by plying them with trillions of words, phrases and images found on the internet or in specialized databases; when a chatbot answers your question, it’s summoning up a probabilistic string drawn from those inputs to produce something bearing a resemblance, often a surprising resemblance, to what a human might produce. But the output is mostly a simulacrum of human thought, not the product of cogitation.

As I wrote recently, three of my books are listed in the database of hundreds of thousands of books used to train at least some chatbots.

Copyright holders have asserted that this process amounts to copyright infringement, because the AI firms haven’t secured permission to use these works or paid fees for doing so. AI advocates say it’s nothing of the kind: that their exploitation of the works is exempt from copyright claims through the doctrine of “fair use.” More on that in a moment.

First, let’s examine the argument that AI is too important to be derailed by copyright claims.

The quintessential expression of this idea has come from Andreessen Horowitz, a Silicon Valley venture investment firm that has sunk millions of dollars into more than 40 AI startups, in its submission to the U.S. Copyright Office for the agency’s public inquiry into AI copyright issues.

In its comment, the firm stated: “It is no exaggeration to say that AI may be the most important technology our civilization has ever created, at least equal to electricity and microchips, and perhaps even greater than those innovations.”

A few points about this.

First, it is no exaggeration to say that this statement may be the most self-serving pile of bombastic twaddle Silicon Valley has ever created.

The firm gives away its game in the final sentence of that paragraph: “The only way AI can fulfill its tremendous potential is if the individuals and businesses currently working to develop these technologies are free to do so lawfully and nimbly.” In other words: Everyone should get out of our way.

Second, most of its claims involve what lawyers would call assuming facts not in evidence.

Andreessen Horowitz writes, for example, that “AI will increase productivity throughout the economy, driving economic growth, creation of new industries, creation of new jobs, ... allowing the world to reach new heights of material prosperity.”

It may be fair to say that these outcomes are aspirations of AI advocates (at least some of them), but saying they will happen? All we can say for sure is: not yet.

Third, just as an aside, electricity wasn’t created by civilization, but by Mother Nature. Civilization may have invented ways to exploit electricity, but it was around even before the primordial ooze first coalesced on Earth into living creatures.

Lest anyone think that AI investors are only in it for the money, Andreessen Horowitz caps off its comment by arguing that “U.S. leadership in AI is not only a matter of economic competitiveness—it is also a national security issue. ... We cannot afford to be outpaced in areas like cybersecurity, intelligence operation, and modern warfare, all of which are being transformed by this frontier technology.”

But make no mistake: Money is the root of the industry’s concerns. “The bottom line is this,” Andreessen Horowitz says: “Imposing the cost of actual or potential copyright liability on the creators of AI models will either kill or significantly hamper their development.” The only survivors would be “those with the deepest pockets. ... A multi-billion-dollar company might be able to license copyrighted training data, but smaller, more agile startups will be shut out of the development race entirely.”

I’ll outsource the response to this assertion to the copyright advocate and tech critic David Newhoff, who writes, “If the future of U.S. national security depends on developing a for-profit generative AI to make music or paint pictures, we’re screwed.”

That brings us back to the copyright issue.

The AI gang has reason to be concerned about copyright claims. The backlash against uncompensated exploitation of copyrighted material is spreading through the court system. I reported last month on a copyright infringement lawsuit filed by writers including novelists John Grisham, George R.R. Martin, Scott Turow and other members of the Authors Guild against OpenAI, the leading AI chatbot developer, and another filed by Sarah Silverman against Meta Platforms (the parent of Facebook).

Two other lawsuits are worth noting.

One was filed in February in Delaware federal court by Getty Images, which holds the rights to untold historical and contemporary photographs, against Stability AI. Getty asserts that Stability has “copied more than 12 million photographs” from its collections, along with captions and digital data, without permission — allegedly to build a competing commercial business.

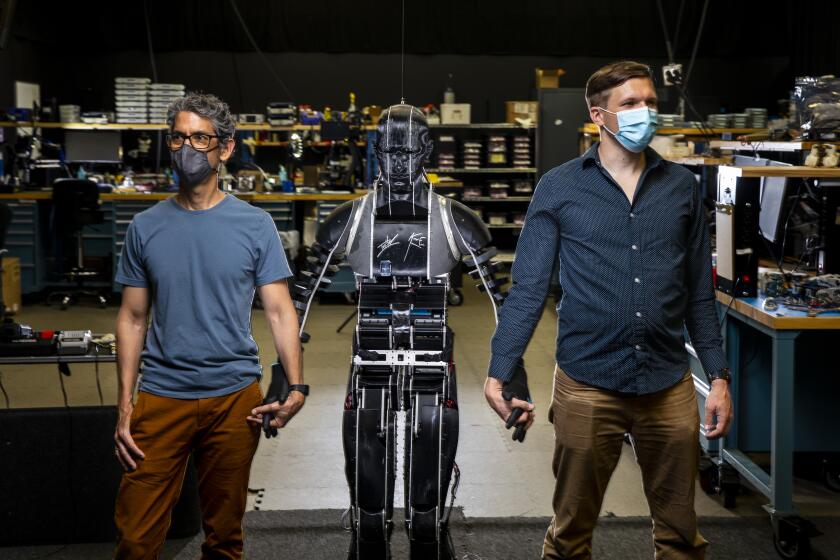

One of our most accomplished experts in robots and AI explains why we expect too much from technology. He’s fighting the hype, one successful prediction at a time.

Stability responded by asserting that Getty filed in the wrong court and that its case should be paired with a separate class-action copyright infringement lawsuit filed against Stability by several artists in San Francisco federal court. There, a judge dismissed the complaint, but allowed the plaintiffs to file an amended lawsuit by the end of this month.

The second case was filed last month in Tennessee federal court against AI developer Anthropic by dozens of music publishers. They assert that Anthropic has trained its AI bot, known as Claude, by the “mass copying and ingesting” of copyrighted song lyrics, so that Claude can regurgitate them to its users by generating “identical or nearly identical copies of those lyrics” on request. (Anthropic, which has announced a $4-billion investment from Amazon, hasn’t formally replied to the lawsuit.)

The AI industry likes to claim that the fair-use exemption is clear and established law. Indeed, Andreessen Horowitz’s comment to the Copyright Office pleads that “there has been an enormous amount of investment—billions and billions of dollars—in the development of AI technologies, premised on an understanding that, under current copyright law, any copying necessary to extract statistical facts is permitted.”

The firm continues, “A change in this regime will significantly disrupt settled expectations in this area.”

It would be hard to find a scale powerful enough to weigh the sheer arrogance of this argument. It boils down to the claim that even if the entire AI industry happens to be wrong about the application of copyright law, its investors have staked so much on an erroneous legal interpretation that we should just give them a pass.

The argument is a familiar one — that money should trump all other considerations, including the public interest.

It’s especially familiar in Silicon Valley, which has given us such social advancements as robotaxis that drag pedestrians through the streets under their wheels, “self-driving” cars that drive themselves into emergency vehicles, and business models that impoverish workers by treating them as independent contractors rather than employees.

That leads to the question of whether the AI firms’ interpretation of fair use is anything like indisputable. The answer is no. As I reported before, fair use is what allows snippets of published works to be quoted in reviews, summaries, news reports or research papers, or to be parodied or repurposed in a “transformative” way.

AI firms argue that their use is “transformative” enough. Venture investor Vinod Khosla maintains that what chatbot trainers do is no different from how creative humans have drawn from their predecessors throughout the centuries.

“There are no authors of copyrighted material that did not learn from copyrighted works, be it in manuscripts, art or music,” Khosla wrote recently. “We routinely talk about the influence of a painter or writer on subsequent painters or writers. They have all learned from their predecessors. ... Many if not most authors or artists have talked about others that have been inspiration, influence or training materials for them.”

Nice try, Mr. Khosla, but no. To say that Meta’s chatbot was “influenced” or “inspired” by the 200,000 books and countless internet posts scraped to “train” it in the same way that Cormac McCarthy was inspired or influenced by William Faulkner, or Raymond Chandler’s prose by Ernest Hemingway’s, is self-evidently absurd.

In any case, what does “transformative” mean? “Millions of dollars in legal fees have been spent attempting to define what qualifies,” a review of the landscape by the Stanford Libraries says. “There are no hard-and-fast rules, only general guidelines and varied court decisions.”

We won’t have an answer until judges start weighing in on the lawsuits challenging the AI firms’ exploitation of published materials and decide whether it’s thievery or fair use. And that’s assuming that all the judges end up in agreement, which is not the way to bet.

In the meantime, it’s wise to ignore the AI gang’s more presumptuous arguments. The question won’t be answered by their claim to hold the secret to moving human intelligence ahead, like the monolith in “2001: A Space Odyssey.” Or by the supposed injustice of rendering the billions they’ve already invested nugatory.

It will be answered by weighing the rights of creators against the self-interest of commercial entrepreneurs. We know what they’re up to: privatizing the profits they might collect from AI applications while sticking the creative public with the costs. Will we let them pull this same stunt again?
