Tesla stopped reporting its Autopilot safety numbers online. Why?

Like clockwork, Tesla reported Autopilot safety statistics, once every quarter, starting in 2018. Last year, those reports ceased.

Around the same time, the National Highway Traffic Safety Administration, the nation’s top auto safety regulator, began demanding crash reports from automakers that sell so-called advanced driver assistance systems such as Autopilot. It began releasing those numbers in June. And those numbers don’t look good for Autopilot.

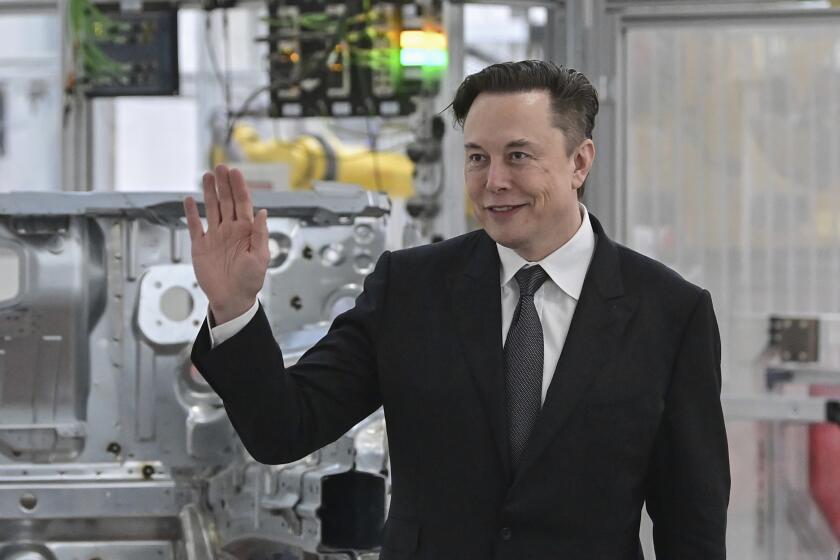

Tesla won’t say why it stopped reporting its safety statistics, which measure crash rates per miles driven. The company employs no media relations department. A tweet sent to Tesla Chief Executive Elon Musk inviting his comments went unanswered.

Tesla critics are happy to speak up about the situation, however. Taylor Ogan, chief executive at fund management firm Snow Bull Capital, held a Twitter Spaces event Thursday to run through his own interpretation of Tesla safety numbers. He thinks he knows why the company ceased reporting its safety record: “Because it’s gotten a lot worse.”

Also on Thursday, NHTSA announced it had added two more crashes to the dozens of automated-driving Tesla incidents that it’s already investigating. One involved eight vehicles, including a Tesla Model S, on the San Francisco Bay Bridge on Thanksgiving Day.

Through Friday’s close, Tesla stock has lost 65% of its value this year.

Ogan, using NHTSA crash numbers, Tesla’s previous reports, sales numbers and other records, concluded that the number of reported Tesla crashes on U.S. roads has grown far faster than Tesla’s sales growth. The average monthly growth in new Teslas since NHTSA issued its standing order was 6%, he figures, while comparable crash stats rose 21%.

The Tesla Autopilot crash numbers are far higher than those of similar driver-assistance systems from General Motors and Ford. Tesla has reported 516 crashes from July 2021 through November 2022, while Ford reported seven and GM two.

To be sure, Tesla has far more vehicles equipped with driver-assist systems than the competition — an estimated 1 million, Ogan said, about 10 times as many as Ford. All else equal, that would imply Tesla ought to have a NHTSA-reported crash total of 70 since last summer to be comparable with Ford’s rate. Instead, Tesla reported 516 crashes.
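The fleet-size adjustment behind that comparison is simple enough to check by hand. The short sketch below reproduces it using only the figures cited above: Ford's seven reported crashes, Tesla's 516 and Ogan's estimate that Tesla has about 10 times as many driver-assist vehicles on the road. The function and variable names are illustrative, and the calculation assumes, as Ogan's does, that all else is equal between the two fleets.

```python
def expected_crashes(reference_crashes: int, fleet_ratio: float) -> float:
    """Scale a reference automaker's crash count by relative fleet size."""
    return reference_crashes * fleet_ratio


# Figures as cited in the article (July 2021 through November 2022).
FORD_CRASHES = 7
TESLA_CRASHES = 516
FLEET_RATIO = 10  # Ogan's estimate: Tesla fleet is about 10x Ford's

# Crashes Tesla would have reported if it crashed at Ford's per-vehicle rate.
expected = expected_crashes(FORD_CRASHES, FLEET_RATIO)

# How many times larger the reported total is than that expectation.
excess_factor = TESLA_CRASHES / expected

print(f"Expected Tesla crashes at Ford's rate: {expected:.0f}")
print(f"Reported total is {excess_factor:.1f} times that figure")
```

On those inputs, Tesla's reported total comes out at roughly seven times what Ford's rate would predict. The result is only as good as the fleet estimate and the automakers' own reporting.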

Tesla’s quarterly safety reports were always controversial. They did put Tesla Autopilot in a good light: For the fourth quarter of 2021, Tesla reported one crash per 4.31 million miles driven in cars equipped with Autopilot. The company compared that with government statistics that show one crash per 484,000 miles driven on the nation’s roadways, for all vehicles and all drivers.

But statisticians have pointed out serious analytical flaws, including the fact that the Tesla stats involve newer cars being driven on highways. The government’s general statistics include cars of all ages on highways, rural roads and neighborhood streets. In other words, the comparison is apples and oranges.

None of the statistics, Tesla’s or the government’s, separate out Autopilot from the company’s controversial Full Self-Driving feature. FSD is a $15,000 option that’s more aspirational than its name implies: No car being sold today is fully autonomous, including those with FSD.

Autopilot combines adaptive cruise control with lane-keeping and lane-switching systems on highways. FSD is marketed as advanced artificial intelligence technology that can cruise neighborhood streets, stop and go at traffic lights, make turns onto busy streets and generally behave as if the car drives itself. The fine print, however, makes clear the human driver must be in full control and is legally liable for crashes — including those involving injuries and deaths.

The internet is filled with videos of FSD behaving badly — turning into oncoming traffic, confusing railroad tracks for roadways, running red lights and more.

The number of injuries and deaths involving Autopilot and FSD is unknown — except, perhaps, to Tesla. Publicly available safety statistics about autonomous and semiautonomous vehicles are scarce. Meanwhile, the crash reporting system in the U.S. is rooted in 1960s methodology, and no serious attempt to update it for the digital world appears to be in the works, at NHTSA or elsewhere.

NHTSA’s driver-assist crash statistic collection order, issued in 2021, depends on the car companies for accurate and complete reporting. (Musk has misstated FSD’s safety record in the past, including a claim that the technology had been involved in no crashes, when the public record made clear it had.)

Not all the information sent to NHTSA is available for public scrutiny.

Ogan, who drives an FSD-equipped Tesla, said more public information would allow a lot more transparency into robot car safety, at Tesla and other automakers. Tesla once reported its Autopilot utilization rate but no longer does so. “I’m looking at the only data available,” he said.

The California Department of Motor Vehicles has been investigating whether Tesla is violating its rule against marketing vehicles as fully autonomous when they’re not. Musk has stated clearly that the company plans to develop FSD to create a fully autonomous robotaxi that Tesla owners could rent out for extra cash. He had promised 1 million of them on the road by 2020, but that date came and went and no fully autonomous Tesla exists. The DMV declined to comment.

FSD’s safety and capabilities are, by Musk’s own admission, existential concerns — especially as Tesla stock continues to dive-bomb. In a June interview with Axios, he said that “solving” FSD is “really the difference between Tesla being worth a lot of money and being worth basically zero.”
