Review: AI’s humble beginnings and potentially sinister future

On the Shelf

Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World

By Cade Metz

Dutton: 384 pages, $28

Several years ago, I met a woman for coffee at Battery, a private club in San Francisco’s North Beach neighborhood where technology swells used to hang out pre-COVID-19.

The woman ran communications for Andreessen Horowitz, the famed venture capital firm whose official tagline is: “Software is eating the world.”

Our talk turned to artificial intelligence. I marveled at the wonderful things AI promised us, but I did worry about people’s jobs. “What’s an accountant displaced by AI going to do?” I asked.

“Oh, people will be able to pursue their creative passions,” she said.

For instance?

“I don’t know. Braid hair? She could set up a shop and braid hair, if that’s her passion.”

OK, then.

I thought back to this conversation while reading Cade Metz’s excellent new book, “Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World.”

“Genius Makers” is not really a history of AI, as such. Artificial intelligence goes back at least to the 1950s. The key thing the field accomplished over most of those years was to explore a number of dead-end ideas that proved worthless or not ready for prime time. In other words, basic scientific research doing its thing.

While Metz, a reporter for the New York Times, does sketch out the early history, his focus is on the last 10 years or so, when a once-belittled AI approach known as neural networking began to insinuate itself, for good or ill, into the daily lives of humans around the world. Alexa, Google Home, Siri — all made possible with AI neural networks. Facebook’s ability to read faces in photos and identify them by name? Neural nets.

It’s not just the sinister stuff. Neural net software is helping doctors evaluate cancerous tumors and beginning to turn cars into robots that can drive themselves. Earlier this month, Sonoma County said it would start using neural net technology to help spot the earliest flames of quick-building wildfires. The possibilities are endless. But as with any powerful technology, there are downsides too. Serious downsides.

Unlike many books written about AI, this one doesn't require a science or engineering degree to learn from and enjoy. Anyone with an enthusiastic curiosity about science, technology and the future of human culture will find this clear-eyed, snappily written book both entertaining and valuable. You could even call it essential for any policymakers, politicians, police, lawyers, judges and decision-makers who will be contending with the social forces unleashed by artificial intelligence. Which, soon, will mean all of them.

The same technology that lets your daughter call up Cardi B’s “WAP” with a voice command is also being used for government surveillance, racial profiling and the creation of “deep fake” YouTube videos that can mimic a real person so closely it’s becoming nearly impossible to tell the difference — from fake Tom Cruise to fake Hillary Clinton to your fake brother-in-law.

Adding to the ethical tangles already proliferating, these programs in some ways write themselves, which makes it difficult to look inside and figure out where an errant machine went wrong. That conundrum is known as the black box problem.

Don’t worry: Metz addresses these AI species and subspecies quickly and clearly, explaining just enough of the technology to make sense of the larger human dilemmas. (Lay readers looking for more detail should also read the recently published “Evil Robots, Killer Computers, and Other Myths” by Steven Shwartz, another clearly written book that goes into a bit more depth on the underlying principles.)

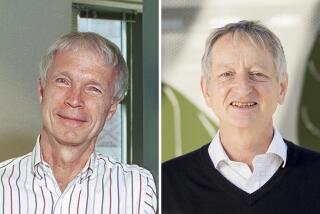

Metz starts his story with the man who might be considered the father of modern neural nets, Geoff Hinton, a Canadian researcher who eventually sold his startup company to Google for $44 million. Hinton and another key figure, Yann LeCun (who soon went to work for Facebook), had long championed deep learning, the approach behind a 2012 Google experiment in which a system, fed millions of images from YouTube videos, began to recognize cats on its own.

For decades, Hinton and LeCun remained obsessed with neural nets, long after the approach had fallen out of favor with most AI researchers. Among the hurdles they faced: training neural nets to recognize patterns and produce useful results requires enormous piles of data and prodigious processing power. Once Google and Facebook got going, the data flowed in torrents, provided by users free of charge. At the same time, graphics chips originally designed for video games offered a hardware architecture that could begin to handle the volume.

Most people are only vaguely aware of their own contributions to neural net research. The data are pulled mostly from you and yours, collected in vast quantities from searches on Google, posts on Instagram, personality tests on Facebook, videos on TikTok. Sometimes you are forced to contribute: online security checks that confirm you're "not a robot" are in turn used to make better robots. Those squares you click on to identify a crosswalk or a stop sign or a school bus help companies build self-driving cars.

All that information is gathered in huge cloud data centers owned by the technology giants, and their machines are learning to identify facial images, body language, product preferences, sexual interests — figuring out how to shape consumer and political opinion with or without deep fakes.

Imagining the uses to which that data could be put is scary enough. But the garbage-in, garbage-out principle of standard computing applies, in a different way, to neural networks. Metz describes how a neural net at Google began identifying Black people as gorillas, and how one meant to filter out pornographic images had far more false positives with Black people than white people.

One reason, Metz notes, is that the AI field in the U.S. is overwhelmingly white and male. So white people are feeding the machines training pictures in ways that, consciously or not, create racist neural nets.

Metz also addresses the theory of artificial general intelligence, or AGI, in which machines become as smart as humans or smarter and begin to take over the planet. He gives AGI advocates their due, but he clearly sides with those who think that day may never come, or at least not for a long time, and that we're much better off focusing on immediate real-world problems caused by the technology we're living with today.

These are important issues, and Metz’s book is the best one-stop shop to learn about them. It might encourage deeper study. It may help all of us challenge Silicon Valley’s blithe dismissal of the world it is creating: “Let them braid hair.”
